Preface

Previously, I manually refreshed the CDN cache of the entire blog site through the DogeCloud Console. The CDN origin is the URL deployed by Vercel.

Inspired by the article Empty Dream: Fully Automated Blog Deployment Solution, I decided to automate this step. CDN providers such as DogeCloud publish API documentation and code examples that can be used out of the box.

Repository example: https://github.com/mycpen/blog/tree/main/.github

Personal Example

1. Add Workflow File

In my setup, I add the file source/.github/workflows/refresh-dogecloud.yml with the following content:

# This workflow refreshes the DogeCloud CDN cache after each push to main
# For more information see: https://docs.github.com/en/actions/automating-builds-and-tests/building-and-testing-python

name: Refresh dogecloud CDN

on:
  push:
    branches:
      - main
  workflow_dispatch:

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - uses: szenius/set-timezone@v1.0 # Set the timezone of the execution environment
        with:
          timezoneLinux: "Asia/Shanghai"
      - name: Set up Python 3.10
        uses: actions/setup-python@v3
        with:
          python-version: "3.10"
      - name: Wait for 3 minutes
        run: sleep 180 # Wait for 3 minutes, in seconds
      - name: Run refresh script
        env:
          ACCESS_KEY: ${{ secrets.ACCESS_KEY }}
          SECRET_KEY: ${{ secrets.SECRET_KEY }}
        run: |
          pip install requests
          python .github/refresh-dogecloud.py
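GitHub Actions marks a `run` step as failed only when its command exits with a non-zero status. As a minimal sketch, assuming the response shape handled by the refresh script in step 2 (`code`, `data.task_id`, `msg`), a hypothetical helper `exit_status` could turn the API response into an exit status, so that a rejected refresh shows up as a failed workflow run:

```python
import sys


def exit_status(api_response):
    """Map a DogeCloud-style response dict to a process exit status (0 = success).

    The helper name and the fallback message are illustrative assumptions;
    the keys 'code', 'data', 'task_id' and 'msg' mirror the refresh script.
    """
    if api_response.get("code") == 200:
        print("refresh task submitted:", api_response["data"]["task_id"])
        return 0
    print("api failed:", api_response.get("msg", "unknown error"), file=sys.stderr)
    return 1


# Example responses shaped like the ones the refresh script checks
ok_response = {"code": 200, "data": {"task_id": "example-task-id"}}
failed_response = {"code": 400, "msg": "example failure"}
statuses = (exit_status(ok_response), exit_status(failed_response))
```

In the script itself, calling `sys.exit(exit_status(api))` in place of the final `if`/`else` would be one way to use it.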

2. Add PY Script: Refresh Cache

In my setup, I add the file source/.github/refresh-dogecloud.py. The url_list in the script holds the directories to refresh and must be edited by hand for your own site. The code is adapted from the DogeCloud documentation (API Authentication and Refresh Directory Task). The file content is as follows:

from hashlib import sha1
import hmac
import requests
import json
import urllib.parse
import os


def dogecloud_api(api_path, data=None, json_mode=False):
    """
    Call DogeCloud API

    :param api_path: The API interface address to be called, including URL request parameters QueryString, for example: /console/vfetch/add.json?url=xxx&a=1&b=2
    :param data: POST data, dictionary, for example {'a': 1, 'b': 2}
    :param json_mode: Whether the data is requested in JSON format, default is False, which means to use form format (a=1&b=2)

    :type api_path: string
    :type data: dict
    :type json_mode: bool

    :return: Returned data
    :rtype: dict
    """
    # The DogeCloud permanent AccessKey and SecretKey are read from environment variables
    # (set through GitHub Secrets); their values can be viewed in User Center - Key Management
    # Do not expose AccessKey and SecretKey on the client, otherwise malicious users will gain full control of the account
    access_key = os.environ["ACCESS_KEY"]
    secret_key = os.environ["SECRET_KEY"]

    data = data or {}  # Avoid a mutable default argument
    body = ''
    mime = ''
    if json_mode:
        body = json.dumps(data)
        mime = 'application/json'
    else:
        body = urllib.parse.urlencode(data)  # Python 2 can use urllib.urlencode directly
        mime = 'application/x-www-form-urlencoded'

    # Sign "api_path + newline + body" with HMAC-SHA1 using the SecretKey
    sign_str = api_path + "\n" + body
    signed_data = hmac.new(secret_key.encode('utf-8'), sign_str.encode('utf-8'), sha1)
    sign = signed_data.digest().hex()
    authorization = 'TOKEN ' + access_key + ':' + sign

    response = requests.post('https://api.dogecloud.com' + api_path, data=body, headers={
        'Authorization': authorization,
        'Content-Type': mime
    })
    return response.json()


# Directories to refresh; edit this list for your own site
url_list = [
    "https://cpen.top/",
]

api = dogecloud_api('/cdn/refresh/add.json', {
    'rtype': 'path',
    'urls': json.dumps(url_list)
})
if api['code'] == 200:
    print(api['data']['task_id'])
else:
    print("api failed: " + api['msg'])  # Failed

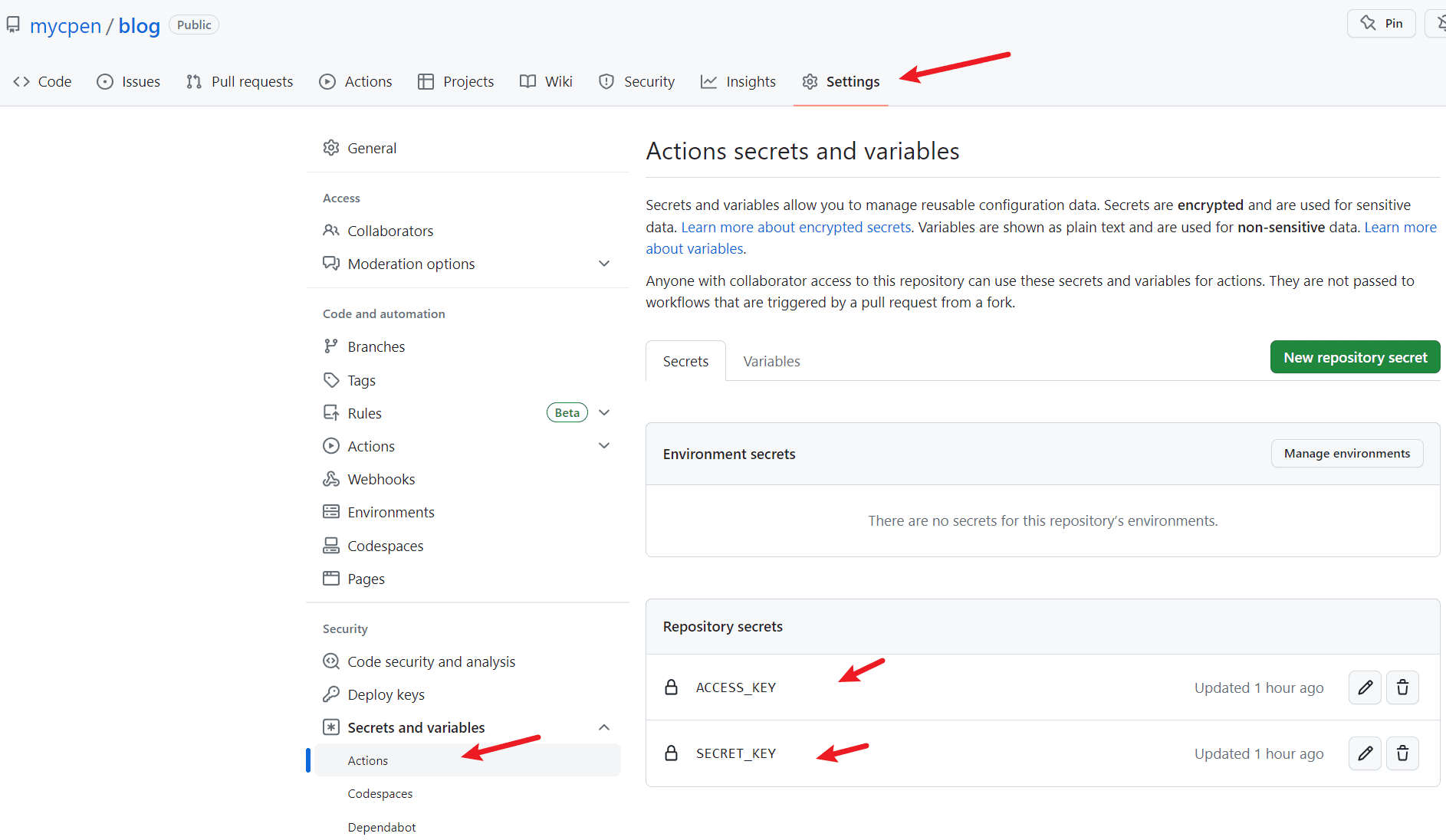
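The signing step of the script above can be isolated and checked offline, without calling the API. The following is a minimal sketch rather than DogeCloud's official code: the helper name `build_authorization` and the demo keys are hypothetical, while the string to sign (`api_path`, a newline, then the body) and the `TOKEN access_key:signature` header format are taken from the script above.

```python
import hmac
import json
import urllib.parse
from hashlib import sha1


def build_authorization(api_path, body, access_key, secret_key):
    """Return the Authorization header value for a DogeCloud-style request."""
    # String to sign: the API path (with query string), a newline, then the raw body
    sign_str = api_path + "\n" + body
    sign = hmac.new(secret_key.encode("utf-8"), sign_str.encode("utf-8"), sha1).hexdigest()
    return "TOKEN " + access_key + ":" + sign


# Placeholder credentials for illustration only; never hard-code real keys
body = urllib.parse.urlencode({"rtype": "path", "urls": json.dumps(["https://cpen.top/"])})
header = build_authorization("/cdn/refresh/add.json", body, "demo-access-key", "demo-secret-key")
print(body)
print(header)
```

Because the signature covers the exact body string, the body sent in the request has to be the same form-encoded string that was signed, which is why the script passes `data=body` rather than the original dictionary.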

3. Add Secrets Variables

Add two secrets for Actions, ACCESS_KEY and SECRET_KEY. Their values can be viewed in the DogeCloud User Center under Key Management.

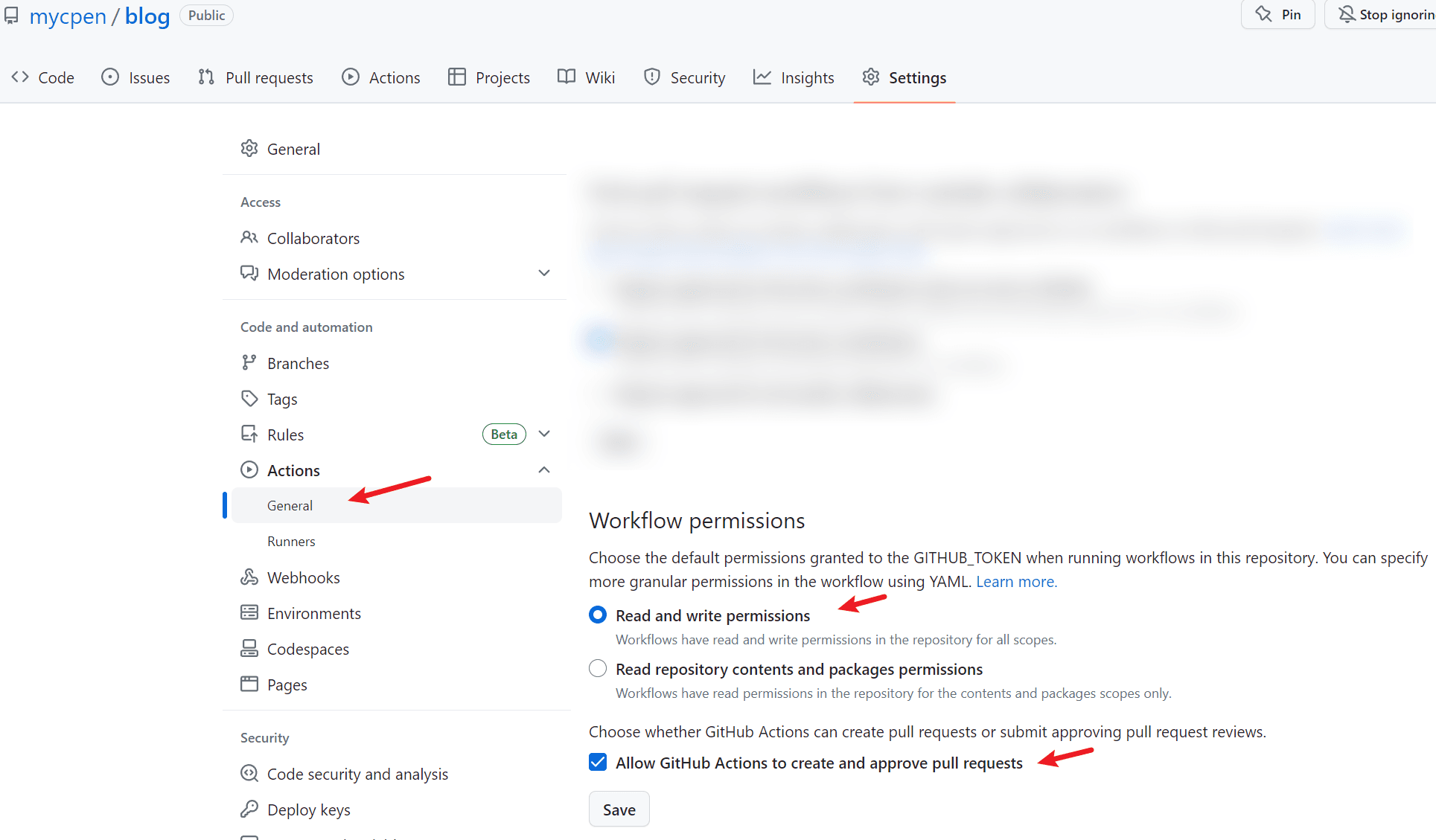

To avoid workflow failures caused by permission issues, you can also grant Actions read and write permissions in the repository settings (Settings - Actions - General - Workflow permissions).
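When a secret has not been defined, `${{ secrets.ACCESS_KEY }}` expands to an empty string, so the script would still start and only fail later with an authentication error from the API. As a hedged sketch, a hypothetical helper `load_credentials` (not part of the original script) could check both variables up front and report which one is missing:

```python
import os


def load_credentials(env=None):
    """Return (access_key, secret_key), raising a clear error if either is unset or empty.

    `env` defaults to os.environ; passing a plain dict makes the check easy to test.
    """
    env = os.environ if env is None else env
    missing = [name for name in ("ACCESS_KEY", "SECRET_KEY") if not env.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return env["ACCESS_KEY"], env["SECRET_KEY"]


# Illustration with placeholder values instead of real secrets
access_key, secret_key = load_credentials({"ACCESS_KEY": "demo-access", "SECRET_KEY": "demo-secret"})
```

Raising early keeps the workflow log readable: the failure names the missing secret instead of surfacing as an opaque API error.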

4. Add JS Code: Copy .github

Preface: by default, hexo generate does not render hidden files and directories whose names start with . (such as .github) into the public directory. I tried most of the solutions found online and suggested by ChatGPT (such as skip_render), but none of them worked. Instead, I copy the source/.github directory to public/.github directly with JS code. Hexo loads the files in the scripts directory each time it starts, so the following code performs the copy whenever a command such as hexo generate runs.

In my setup, I add the file scripts/before_generate.js with the following content:

const fs = require('fs-extra');
const path = require('path');

function copyFolder(source, target) {
  // Create the target folder if it doesn't exist
  fs.ensureDirSync(target);

  // Get the list of files in the source folder
  const files = fs.readdirSync(source);

  // Loop through each file and copy it to the target folder
  files.forEach((file) => {
    const sourcePath = path.join(source, file);
    const targetPath = path.join(target, file);

    if (fs.statSync(sourcePath).isDirectory()) {
      // Recursively copy subfolders
      copyFolder(sourcePath, targetPath);
    } else {
      // Copy the file
      fs.copyFileSync(sourcePath, targetPath);
    }
  });
}

copyFolder('./source/.github', './public/.github');

5. Update _config.yml Configuration

When hexo deploy pushes the rendered static site to GitHub, it skips hidden files starting with . by default.

Modify the Hexo configuration file _config.yml and add the ignore_hidden configuration item for the git deployer, as shown below:

# Deployment
## Docs: https://hexo.io/docs/one-command-deployment
deploy:
  - type: git
    repository: git@github.com:mycpen/blog.git
    branch: main
    commit: Site updated
    message: "{{ now('YYYY/MM/DD') }}"
+   ignore_hidden: false

Reference Links

- DogeCloud: API Authentication Mechanism (Python)

- DogeCloud: SDK Documentation - Refresh and Preload

- ChatGPT (deploy.ignore_hidden, js code to copy directories, sleep)